In this part of our web scraping series we’ll touch on nuances of web scraping Youtube with Python. Read on for useful insights, tips and code from a pro scraper!

What Is Web Scraping?

Using a technique called web scraping, you can automatically collect data from websites. It entails the use of computer programmes called web scrapers or spiders to browse websites and extract data such text, images, links, and other content.

Depending on the target website and the requested data, there are various methods for web scraping. It is simpler to extract data from some websites because they offer it in a structured style, such as through an API. Other times, web scrapers must parse a website’s HTML code in order to collect data, which might be more difficult.

Python, R, and Selenium are just a few of the computer languages and tools that can be used for web scraping Youtube. Web scrapers can use these technologies to automate the procedure of viewing websites, submitting forms, and data extraction.

Can You Actually Do Web Scraping For Money?

Data scraping is crucial these days – it makes it possible for organisations, individuals, and academics to swiftly and effectively collect data from the internet. Web scraping is now a necessary method for gathering and evaluating data due to the growing amount of information available online.

Here are some common commercial uses of web scraping:

1. Market research: Businesses can use web scraping to gather market data and competitive intelligence, such as pricing information, product reviews, and customer sentiment.

2. Lead generation: Web scraping can help businesses generate leads by gathering contact information from websites, such as email addresses and phone numbers.

3. Content aggregation: Web scraping can be used to collect content from multiple sources, such as news articles, social media posts, and blog posts, to create a comprehensive resource for a specific topic.

4. Data analysis: Web scraping allows researchers and analysts to collect and analyze data for various purposes, such as studying consumer behavior, tracking trends, and conducting sentiment analysis.

Overall, online scraping is a powerful tool that can speed up decision-making, offer insightful business data, and reduce time spent on research. Web scraping should, however, be used ethically and responsibly, following the terms of service of the websites being scraped and safeguarding the privacy of people whose data may be implicated.

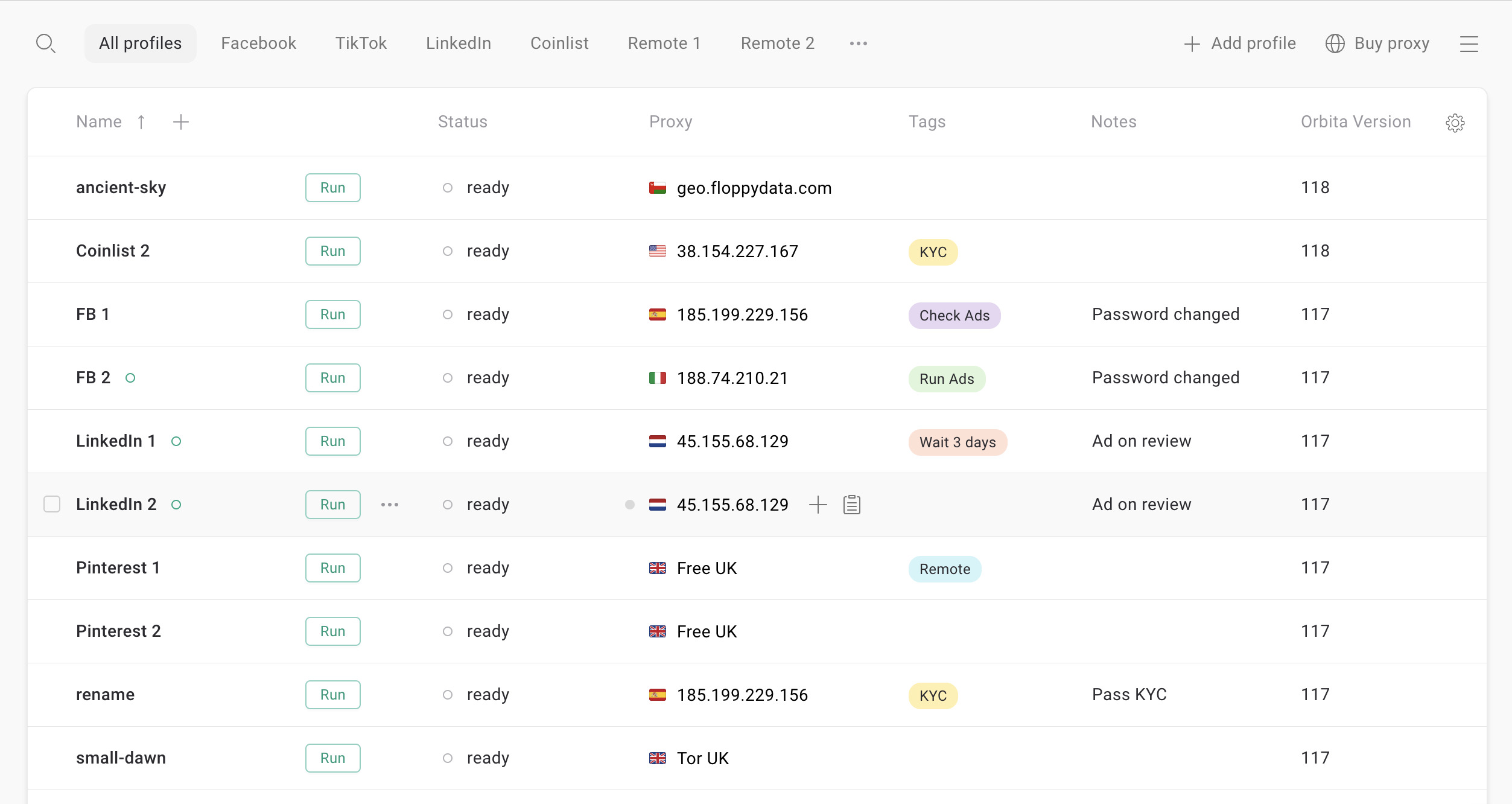

What Is GoLogin?

GoLogin is a cloud-based solution for web scraping and managing several online identities. It offers users a private and secure environment for web browsing, the ability to create and manage several browser accounts, and the ability to automate web scraping operations.

Users can establish and maintain several profiles with GoLogin that each have their own set of cookies, browser settings, and online identities. This feature enables users to sign in to multiple accounts on the same website at once without being seen. This might be helpful for companies and people who, for instance, need to maintain many social media or e-commerce accounts.

GoLogin includes online scraping functionality in addition to managing numerous identities, enabling users to use pre-built or custom scrapers to harvest data from websites. Businesses and researchers who need to collect data for market research, competitive analysis, or other purposes may find this valuable.

GoLogin is an all-inclusive solution for automating web scraping chores and managing online identities. It provides a private and secure environment for managing several profiles while browsing the web, making it a useful tool for both enterprises and individuals that need to manage multiple online identities while collecting data from the web.

How GoLogin Helps Developers?

GoLogin can help developers scrape websites more efficiently and securely in several ways:

- Secure browsing environment: GoLogin provides a secure and private browsing environment for web scraping, protecting user data and preventing detection by websites that may attempt to block scraping activities.

- Multiple browser profiles: GoLogin allows developers to create and manage multiple browser profiles, each with its own set of cookies, browser settings, and online identity. This allows developers to log in to multiple accounts on the same website simultaneously without being detected.

- Automated web scraping: GoLogin offers pre-built and customizable web scrapers, allowing developers to automate web scraping tasks and extract data from websites more efficiently.

- Proxy server integration: GoLogin supports integration with proxy servers, allowing developers to scrape websites from different IP addresses and locations, which can help avoid detection and prevent websites from blocking scraping activities.

Overall, GoLogin can help developers scrape websites more efficiently and securely by providing a secure and private browsing environment, allowing multiple browser profiles and automating web scraping tasks, and supporting integration with proxy servers.

Using Selenium for Web Scraping Youtube on Windows

There are many technologies that may be used to perform web scraping, which is a potent method for gathering data from websites. Web scraping can be done with Selenium, a well-liked automation tool.

The capability to interact with web pages, model user behaviour, and automate operations are just a few of the characteristics that make it an effective web scraping tool.

Set Up Selenium On Your Computer

To use Selenium with Python , you’ll need to have Python installed on your computer. You can download Python from the official Python website. Once you have Python installed, you’ll need to install the Selenium package by running the command pip install selenium in a command prompt or terminal window.

Importing Driver

Selenium requires a web driver to interact with web pages. You can download the web driver for your preferred web browser from the official Selenium website. Once you’ve downloaded the web driver, you’ll need to specify its location in your code by adding a few lines of code at the beginning of your script.

from selenium import webdriver

driver = webdriver.Chrome('/path/to/chromedriver')

#

#

#

driver.quit()

How to set up and use GoLogin for web scraping Youtube?

Step 1: Create an account

Making an account on GoLogin’s website is the initial step in using the service. You can accomplish this by going to the GoLogin website and creating an account using your email address. You can log in to the platform and begin configuring your browser profiles after creating an account.

Step 2: Set up a browser profile

GoLogin employs a browser profile as a distinct identity to simulate actual user behaviour. Choose the browser you want to use, such as Google Chrome or Mozilla Firefox, before you can create a profile for it. The profile can then be altered by include user agents, fingerprints, and IP addresses. These features will assist in making the profile appear more authentic, lowering the chance of getting discovered.

Step 3: Configure the proxy settings

You can modify the proxy settings for your browser profile to further lower the chance of detection. By doing this, you can give every website you visit a distinct IP address, which makes it more challenging for them to monitor your online behaviour.

Step 4: Start web scraping

You can begin web scraping Youtube after setting up your proxy settings and browser profile. You need to do this by writing a web scraping script in a computer language like Python. The script should access the website and extract the necessary data using the GoLogin-created browser profile.

Web Scraping Youtube Steps (without GoLogin)

Generating API Key

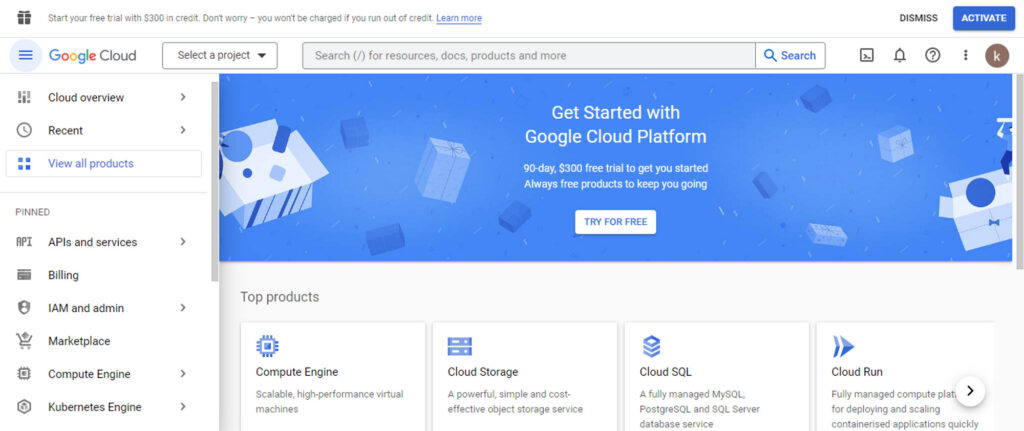

To generate a YouTube API key for data scraping, follow these steps:

1. Go to the Google Developers Console at https://console.developers.google.com

3. Name your project and select a billing account (if you don’t have one, you will need to create one).

4. Once your project is created, click on the “Enable APIs and Services” button.

5. Search for “YouTube Data API” and select it.

6. Click on the “Enable” button.

7. Next, you will need to create a new API key. Click on the “Create credentials” button and select “API Key.”

8. You can choose to restrict the API key to specific IP addresses, websites, or apps if desired.

9. Once you have created the API key, copy it and use it in your data scraping code to authenticate your requests to the YouTube Data API.

Accessing YouTube data requires an API key, which is the first step in the process. The next step is to learn how to use the API by identifying the resources and methods required and how to write code to access the data. Google provides documentation for the YouTube Data API that can be found by searching for it in a browser.

The documentation contains information on various resources that can be used to access different types of data from YouTube. For example, the channels resource can be used to access channel statistics such as the channel name and total number of videos using the list method. Google provides sample code for calling the API and accessing data for different programming languages, including Python.

The documentation also includes a guide section with quick start guides for different programming languages. For example, the Python quick start guide includes information on installing the necessary library for using an API key to access YouTube data.

It is important to note that there is a quota and limit on the number of requests that can be made per day using the YouTube Data API. Different methods have different costs associated with them, which can be found in a table provided by Google. If the daily request count exceeds the limit, access to the API will be denied.

Importing the libraries

We makes use of the googleapiclient module to import the build function which is used to create a client instance for interacting with Google APIs.

from googleapiclient.discovery import build import pandas as pd

Using Api Key

We define an API key and a list of YouTube channel IDs. It then uses the build() function from the googleapiclient.discover module to create a connection to the YouTube Data API. The connection is authenticated using the API key, and specifies that version 3 of the API should be used. The resulting youtube object can then be used to make API calls to interact with the specified YouTube channels.

api_key = '****'

channel_ids = ['UCZFMm1mMw0F81Z37aaEzTUA',

'UCnz-ZXXER4jOvuED5trXfEA',

'UCw8ZhLPdQ0u_Y-TLKd61hGA',

'UCTGlBH7oKcVxsxGqMqYopIQ']

youtube = build('youtube', 'v3', developerKey=api_key)

Scraping

This code defines a function called get_channel_stats that takes two arguments, youtube and channel_ids. The youtube parameter is an instance of the googleapiclient.discovery module which is used to make requests to the YouTube API. The channel_ids parameter is a list of YouTube channel IDs for which we want to retrieve statistics.

The function makes a request to the YouTube API using the channels().list() method and passes the part parameter to specify which parts of the channel data we want to retrieve (snippet, content details, and statistics), as well as the id parameter to specify the channel IDs we want to retrieve data for. The execute() method is called on the request object to send the request and get a response.

The response contains data for all the channels specified in the channel_ids list. The function loops over each channel in the response and extracts relevant data such as the channel name, subscriber count, total views, and total videos. It then stores this data in a dictionary object and appends it to the all_data list.

Finally, the function returns the all_data list containing statistics for all the channels specified in the channel_ids list. This function is useful for retrieving statistics for multiple YouTube channels at once and can be used for data analysis or visualization.

def get_channel_stats (youtube, channel_ids): all_data = [] request = youtube.channels().list ( part='snippet, contentDetails, statistics', id=','.join(channel_ids)) response = request.execute() for i in range(len(response ['items'])): data = dict (Channel_name = response ['items'][i] ['snippet']['title'], Subscribers = response ['items'][i] ['statistics']['subscriberCount'], Views = response ['items'][i] ['statistics']['viewCount'], Total_videos = response ['items'][i]['statistics']['videoCount']) all_data.append(data) return all_data

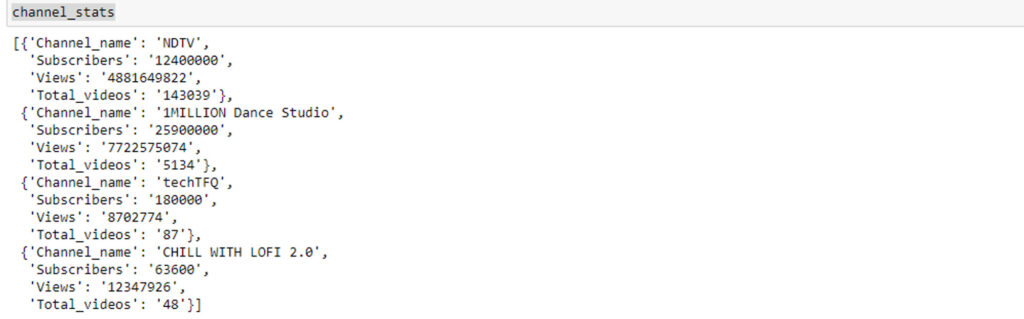

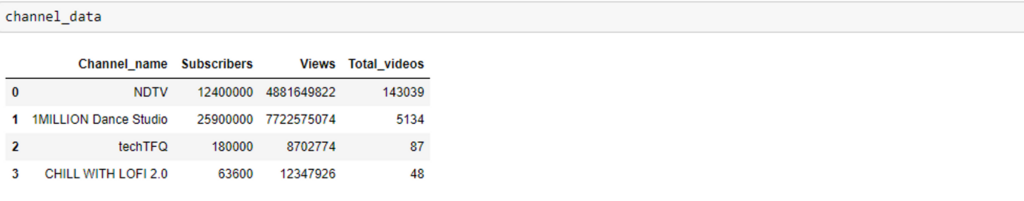

Output

channel_stats=get_channel_stats(youtube, channel_ids) channel_stats

Web Scraping Youtube Using Gologin

The main benefits of using Gologin for scraping YouTube include easy integration with Python, the ability to handle complex authentication workflows, and the ability to bypass Google’s anti-bot measures. Gologin provides a user-friendly interface that simplifies the process of authenticating with Google, which is necessary for accessing the YouTube API.

Additionally, Gologin’s advanced features, such as session persistence and multi-factor authentication, allow for more robust scraping and reduce the risk of account suspension. Overall, Gologin streamlines the process of scraping YouTube data and improves the reliability of the scraping process.

import requests

import json

from gologin import GoLogin

# Set up GoLogin credentials

gologin_email = 'your_gologin_email'

gologin_password = 'your_gologin_password'

gologin_profile_id = 'your_gologin_profile_id'

# Set up YouTube credentials

youtube_api_key = 'your_youtube_api_key'

# Set up GoLogin session

gl = GoLogin()

session = gl.get_session(gologin_email, gologin_password, gologin_profile_id)

# Define function to get channel stats

def get_channel_stats(channel_id):

url = f'https://www.googleapis.com/youtube/v3/channels?id={channel_id}&key={youtube_api_key}&part=statistics'

response = session.get(url)

data = json.loads(response.text)['items'][0]['statistics']

return data

# Example usage: get stats for PewDiePie's channel

channel_id = 'UC-lHJZR3Gqxm24_Vd_AJ5Yw' # PewDiePie's channel ID

stats = get_channel_stats(channel_id)

print(stats)

Here’s how the code works:

- First, we import the necessary libraries: requests, json, and gologin.

- We set up our GoLogin credentials by specifying our GoLogin email, password, and profile ID.

- We also set up our YouTube API key.

- We create a GoLogin session using the gl.get_session() method, passing in our GoLogin credentials.

- We define a function called get_channel_stats() that takes a channel ID as input and returns the channel’s statistics (subscribers, views, etc.) as a dictionary.

- In the example usage, we call the get_channel_stats() function with PewDiePie’s channel ID and print the resulting statistics.

Output

Youtube Scraping Policy x GoLogin

Developers can access data in a controlled and allowed manner thanks to YouTube’s API offerings. Although scraping without authorization is expressly forbidden by YouTube’s Terms of Service, the platform takes steps to identify and stop scraping, including IP blocking and CAPTCHA tests.

To avoid potential violations that can lead to account suspension, legal action, or other repercussions, it is imperative that you adhere to the YouTube API terms and conditions.

Gologin offers a means to cycle IP addresses and user agents, which can help prevent detection and potential blocking from YouTube, and can assist with YouTube scraping restrictions. Also, Gologin can aid in maintaining and saving authentication cookies, which might lessen the necessity of signing in again throughout scraping sessions.

Gologin also offers a solution for dealing with captcha difficulties that may arise during scraping sessions. Scraping operations can be made more effective and less likely to be flagged by YouTube’s policies by using Gologin. To avoid any legal difficulties, it is still crucial to adhere to YouTube’s regulations and standards regarding scraping.

Tips And Best Practices For Web Scraping Youtube

- Respect website policies: Before scraping data from a website, make sure to review its terms of service and privacy policy. Some websites may prohibit web scraping or require permission before data can be scraped.

- Avoid overloading servers: Web scraping can put a strain on website servers, so it’s important to avoid scraping large amounts of data or making too many requests in a short period of time. Consider using a delay between requests or scraping data during off-peak hours.

- Handle errors and exceptions: Web scraping can be prone to errors and exceptions, such as server errors, connection timeouts, and invalid data. Make sure to handle errors and exceptions gracefully, such as retrying failed requests or logging errors for later analysis.

- Use a user-agent string: A user-agent string is a piece of code that identifies the web scraper to the website. Using a user-agent string that is commonly used by web browsers can help avoid detection and prevent websites from blocking scraping activities.

- Use proxies: Proxies can be used to rotate IP addresses and avoid detection by websites that may attempt to block scraping activities. However, make sure to use reputable proxy providers and follow their terms of service.

- Observe ethical and legal standards: Web scraping can raise ethical and legal concerns, such as respecting the privacy of individuals whose data is being scraped and complying with data protection laws. Make sure to scrape data only for legal and ethical purposes, and to obtain consent or anonymize data if necessary.

By following these tips and best practices, web scrapers can avoid getting blocked by websites, handle errors and exceptions, and maintain ethical and legal standards when scraping data.

Conclusion

In conclusion, web scraping is a powerful tool for extracting data from websites for various purposes such as market research, competitor analysis, and more. However, it requires proper planning and execution to avoid getting blocked by websites and maintain ethical and legal standards.

GoLogin is a cloud-based tool for managing multiple online identities and web scraping, providing a secure and private browsing environment, allowing multiple browser profiles and automating web scraping tasks, and supporting integration with proxy servers. This makes it a valuable tool for businesses and individuals who need to manage multiple online identities and gather data from the web.

Overall, using Python and GoLogin for web scraping can help organizations and individuals extract valuable insights and information from the web more efficiently and securely, while adhering to ethical and legal standards.

Reference source:

- Zhao B. Web scraping //Encyclopedia of big data. – 2017. – Т. 1.

- Glez-Peña D. et al. Web scraping technologies in an API world //Briefings in bioinformatics. – 2014. – Т. 15. – №. 5. – С. 788-797.

- Sirisuriya D. S. et al. A comparative study on web scraping. – 2015.

- Chapagain A. Hands-On Web Scraping with Python: Perform advanced scraping operations using various Python libraries and tools such as Selenium, Regex, and others. – Packt Publishing Ltd, 2019.

- Nyamathulla S. et al. A Review on Selenium Web Driver with Python //Annals of the Romanian Society for Cell Biology. – 2021. – С. 16760-16768.

Download GoLogin and enjoy safe web scraping Youtube with our free plan!

Read some more useful content on this topic:

Scraping LinkedIn: Pro Scraper’s Guide + Code

Scraping Reddit: Pro Scraper’s Guide + Code

Scraping Twitter: Pro Scraper’s Guide + Code