This article has been updated and edited in January 2025.

Web scraping, as we all know, it’s a discipline that evolves over time, with more complex anti-bot countermeasures and new web scraping tools open source to use.

Let’s find together what code based tools can’t be missed for a python web scraper developer in 2025.

Scrapy

Web Scraping + Python = Scrapy, by definition. Born in 2009, It’s the most complete framework for web scraping projects, that gives the developer plenty of options to control every step of the data acquisition process.

Open source web scraping tool, maintained by Zyte (formerly known as Scrapinghub), has the great advantage that there’s plenty of documentation, tutorials, and courses on the web to start with. Being written in Python allows starting instantly to create your first spider within minutes.

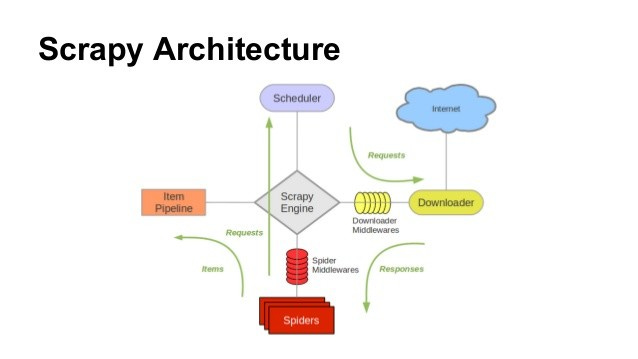

Another great advantage is its modular architecture, described in the picture below and well explained in the official documentation.

Scrapy architecture as described on their documentation

Scrapy architecture as described on their documentation

Let’s briefly summarize the workflow.

- The Engine gets the initial Requests from the Spider, passes them to the scheduler, and then asks for the next requests to continue web crawling.

- The Scheduler returns the requests to make to the Engine that sends them to the Downloader, via its Middlewares. The Downloader returns a Response that goes to the Engine via its Middlewares.

- Again, the Engine sends the Response to the Spider via its Middlewares and Spider returns Items and next Requests.

- Finally, the Engine then sends Items to Items Pipelines and then asks for more Requests to crawl.

Most of the magic of Scrapy happens in the two middlewares: in the Downloader Middlewares, you can add some manipulations to Requests and Responses. As an example, you can filter the Requests before they are sent to the website, maybe because they are duplicated. Or maybe you want to manipulate the Responses before they are used by the spider.

In the Spider Middlewares, you can post-produce the Spider output ( Items or Requests) and handle Exceptions.

Items are the standard output of Scrapy spiders and in the Item Pipelines there are options and functions to manage the scraped data – output of the scrapers, like file formats, field separators, and so on. This makes Scrapy extremely useful for structured data from web pages with several columns per row.

Advanced Scrapy Proxies

A little self-promotion here, this is a python package for Scrapy written by me that handles lists of proxies in several formats and uses it in your Scrapy project. You can use a list accessible on a public URL, a list on the local machine, or a proxy directly in the options. Far from perfect but we use it daily in production.

Scrapy Splash

Scrapy is great but has some limitations, the biggest one is that it reads only static HTML.

To overcome this limit, the scrapy-splash plugin adds the ability to make Splash API calls inside your Scrapy project.

Splash is a lightweight browser with an HTTP API, implemented in Python 3 using Twisted and QT5.

This downloader middleware modifies the Requests, routing them to a Splash server specified in the Scrapy options, so the response contains the result of the Javascript executions.

Microsoft Playwright

In case there’s the need for a real browser to scrape some website, Microsoft Playwright is the newest solution we can rely on.

It is not the only automated test solution that allows us to script a browser execution and scrape its content, there’s Selenium too as an example, but it’s the easiest to use and at the moment the one that guarantees more successful responses in case of strong anti-bot software.

Its installation package already includes the most popular browsers and when included also the playwright-stealth package in the execution, the browser is almost indistinguishable from a real human installation.

Wappalyzer Python

I recently discovered this Python wrapper for Wappalyzer.

Wappalyzer is a tool that discovers the technology stack behind a website, like the anti-bot software and common e-commerce platform.

This wrapper in python user interface allows you to programmatically study your target website from command line.

At the moment this seems to me one of the best web scraping tools open source web crawlers for python web scrapers, but if something is missing or you’re using something else and want to reach out, feel free to write us.

AI Powered Scraping Tools: Necessity In 2025?

While traditional tools like Scrapy and Playwright remain essential, AI-powered scraping solutions are revolutionizing how we approach data extraction projects.

These new generation tools use machine learning models to automatically understand website structures, adapt to layout changes, and generate scraping code with minimal human intervention.

The key advantage is their ability to “learn” from different website patterns and automatically adjust their scraping strategies, significantly reducing development time and maintenance overhead.

Some notable features of modern AI-powered scrapers include:

- Automatic selector generation: Instead of manually writing CSS or XPath selectors, these tools can identify and generate selectors by analyzing visual patterns and HTML structure

- Self-healing scripts: When websites change their structure, AI models can automatically adapt the scraping logic without developer intervention

- Intelligent rate limiting: Advanced algorithms that dynamically adjust request patterns to avoid detection while maintaining optimal scraping speeds

- Natural language interfaces: Ability to describe what data you want to extract in plain English, with the AI generating the appropriate scraping code

The shift toward AI-assisted scraping represents a natural evolution in web scraping technology, making it more accessible to developers of all skill levels while improving reliability and reducing maintenance costs.

However, it’s important to note that these tools work best when combined with traditional scraping frameworks rather than replacing them entirely.

Some More Scraping Trends To Follow In 2025

Most of these we will mention are not new, but the importance of these points is growing steadily.

- For instance, AI-driven scrapers can learn from previous scraping attempts, improving their strategies over time.

- Additionally, the integration of natural language processing (NLP) capabilities allows scrapers to better understand and extract relevant information from unstructured data, making data collection more efficient.

- Another significant addition to the toolkit is the rise of antidetect solutions, such as GoLogin. It’s not open source, however it has a free plan with API access suitable for basic scraping. These tools help users maintain anonymity while scraping by managing digital fingerprints and browser attributes. This is crucial as websites become more adept at detecting automated scraping activities.

GoLogin can be an absolute necessity if you’re targeting websites with good anti-bot protection – in fact, most of them.

GoLogin can be an absolute necessity if you’re targeting websites with good anti-bot protection – in fact, most of them.

Frequently Asked Questions

What is web scraping and why is it important?

What are open-source web scraping tools?

What is the advantage of using Scrapy as a web scraping tool?

How does Selenium differ from other web scraping tools?

This article was kindly provided by Pierluigi Vinciguerra, web scraping expert and founder of Web Scraping Club. Follow this link to see the original post.

Download GoLogin privacy browser here – and enjoy scraping even the most advanced websites with our free plan!